On October 6th, the Rutgers Graduate School of Biomedical Sciences invited Dr. Eric Prager, Editor-in-Chief of the Journal of Neuroscience Research, to speak about the problem of scientific reproducibility, how to avoid common pitfalls in conducting research, and best practices for publishing a scientific paper. (Interested in science publishing as a career and/or how Dr. Prager became an editor at Wiley? Read my previous post).

Over the last few years, the reproducibility of scientific research has been consistently in the spotlight, prompting many discussions within the scientific community about whether a reproducibility problem indeed exists and, if so, how to address it effectively. Dr. Prager thinks that the increasing number of retractions and the amount of irreproducible research being published mean that we are facing a major problem, and he has dedicated his time and efforts to addressing it proactively. He stated that there are two general causes for a retraction: 1) honest error, or 2) the more insidious fraud and misconduct. While the only answer to the second is to not do it, Dr. Prager went on to elaborate on how to reduce honest mistakes in our scientific work. He believes that action is necessary from both sides, authors/researchers as well as publishers, for meaningful change to occur.

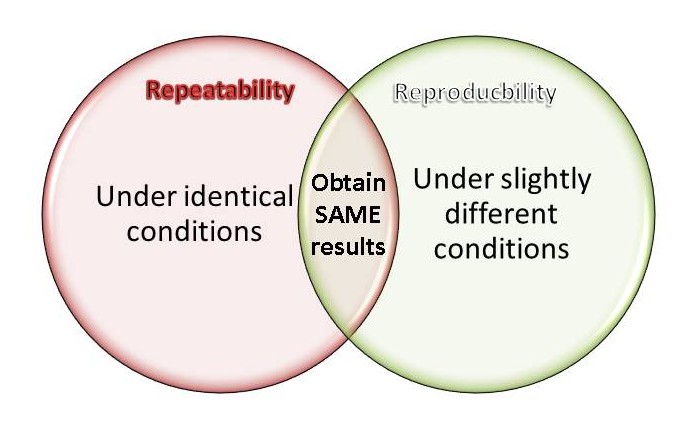

Repeatability vs Reproducibility

Scientific discovery progresses by building on the foundation of previous discoveries. In order for this to happen, two critical criteria must be fulfilled: a scientific work must be repeatable and reproducible. Dr. Prager defines and differentiates the two as:

- Repeatability – ability to replicate your own experiments → convinces yourself and others of the validity of your work, a prerequisite to even considering publishing your work

- Reproducibility – ability to replicate someone else’s work → guarantees objectivity of the study’s results, moves scientific progress forward

Why is scientific work so hard to reproduce?

A recent Nature survey polled over 1,500 researchers from multiple disciplines on reproducibility, and more than 70% of respondents reported having tried and failed to reproduce someone else’s experiments. Dr. Prager discussed some of the reasons why someone else’s published work can be difficult to reproduce:

- Publication and selection bias – Let’s face it; the pressure to publish is a constant in every scientist’s life. According to the survey mentioned above, this is one of the top-rated factors contributing to irreproducible research. Most journals only want to publish positive results, which can lead to selective reporting and an increase in the number of false positives.

- Poor experimental design – The scientific method ideally proceeds as follows: 1) formulate a hypothesis, 2) define objectives, 3) design experiments, 4) analyze results, and 5) formulate a conclusion, based on whether your results support your hypothesis or not. Devising a strategy before performing a single experiment is one of the most critical steps in ensuring the reliability and reproducibility of your work. The most common pitfall in experimental design is a lack of specific detail on the following: controls, replicates, power analysis, statistical analysis, exclusion criteria, how to identify and deal with outliers, and randomization.

- Poor statistical design – Sadly, not all biomedical scientists are well-versed in statistics; in fact, I would say that statistics is generally not a focus in most graduate programs, and there is a dire need for improved training in statistical analysis. As a result, many researchers analyze their results incorrectly or use improper statistics in reporting their data. The experimental design MUST include a statistical design, but this step is often overlooked.

- Incomplete or poorly written methods – The methods section of a journal article is the most important portion for researchers who want to reproduce the reported results. More and more, methods sections are getting shorter, and less detail is being included, which ultimately leads to difficulties in replicating the experiment. This is in part because most journals have page restrictions, and may not even require inclusion of full methods in the paper. Dr. Prager says that for his journal, he is now requiring fully detailed methods to be included in submissions, which will not only facilitate the review process but also will be helpful to readers in fully understanding the reported study.

- Poor data representation – Lastly, even when the experimental and statistical designs are impeccable, another pitfall researchers encounter is failing to convey their data in the most informative way possible. Dr. Prager cites the bar graph as an example of a poor visual representation of data in scientific literature. According to an editorial published in the European Journal of Neuroscience by Rousselet et al. (2016), bar graphs are useful representations of categorical data; however, misusing them to illustrate continuous data results in loss of information and misrepresentation.
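To make the bar graph point concrete, here is a minimal Python sketch. It was not part of Dr. Prager's talk; the two groups of measurements and the function names `bar_summary` and `fuller_summary` are invented purely for illustration. It shows how two samples can share the same mean and standard error of the mean (SEM), which is all a typical bar graph displays, while still differing in ways a dot plot or box plot would reveal.

```python
import math
import statistics


def bar_summary(values):
    """Return (mean, SEM): the only numbers a mean +/- SEM bar graph shows."""
    mean = statistics.mean(values)
    sem = statistics.stdev(values) / math.sqrt(len(values))
    return mean, sem


def fuller_summary(values):
    """Return a few values that a dot plot or box plot would also expose."""
    return {
        "median": statistics.median(values),
        "min": min(values),
        "max": max(values),
    }


# Hypothetical measurements, invented for illustration only.
group_a = [2, 2, 5, 5, 8, 8]    # evenly spread around the mean
group_b = [3, 3, 4, 4, 6, 10]   # mostly low values plus one high outlier

for name, group in (("A", group_a), ("B", group_b)):
    mean, sem = bar_summary(group)
    print(f"Group {name}: mean={mean:.2f}, SEM={sem:.2f}, {fuller_summary(group)}")
```

Drawn as bars with error bars, the two groups would look identical, yet their medians and ranges differ. Plotting the individual data points is one way to keep that information visible to the reader.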

How to Publish a Reproducible Scientific Work

So how, then, can we ensure greater reproducibility in published scientific work? Dr. Prager recently published an excellent editorial in the Journal of Neuroscience Research on this topic that touched upon the pitfalls mentioned above. He said that he is committed to ensuring greater rigor in his journal’s requirements for manuscript submissions. For example, he now requires all submissions to include full experimental and statistical methods. For the methods section, the journal also requires a Research Resource ID (RRID), an authentication tag for all biological resource tools, including antibodies, transgenic mouse lines, cell lines, and software. The journal also has extensive guidelines on statistical and data reporting as well as graphical representation of the data, detailing the requirements briefly mentioned in his talk.

Dr. Prager also offered several useful tips for writing a scientific paper. My summary below echoes his approach of emphasizing the first 3 guidelines of Dr. John Shaw Billings’ “Golden Rules” on publishing a journal article:

1. Have something to say – The first step to a good scientific paper is good scientific work. DO GOOD SCIENCE. Design your experiments with as much detail as possible. Write down everything for every step (detailed reagent lists, protocols, controls, replicates, notes). Analyze your data correctly, using appropriate statistical analysis. If you feel like you are insufficiently trained in statistics, get training! Or else, seek advice and help from a statistician DURING the experimental design, NOT AFTER the experiments have been performed.


2. Say it – You want to be understood, so report your work in the most illuminating and informative way possible. Be concise and accurate. Figure out where you want to submit your article, and follow the journal’s guidelines closely. If English is not your first language, do not be afraid to ask for help. Be as detailed as you can in your methods section. Present and interpret your data correctly. Think like a reviewer, and if available, read the journal’s reviewer guide. Lastly, DO NOT PLAGIARIZE.


3. Stop as soon as you have said it – I have, and so I will.

For more information, a podcast of Dr. Prager’s talk and his presentation slides are available on the Rutgers GSBS website: http://rwjms.rutgers.edu/education/gsbs/index.html

For additional reading:

- Eassom H. The Costs of Research Misconduct. Wiley Exchanges. https://hub.wiley.com/community/exchanges/discover/blog/2016/05/11

- Baker M. 1,500 scientists lift the lid on reproducibility. Nature News Feature. http://www.nature.com/news/1-500-scientists-lift-the-lid-on-reproducibility-1.19970#/correction1

- Rousselet GA, Foxe JJ, and Bolam JP. 2016. A few simple steps to improve the description of group results in neuroscience. European Journal of Neuroscience. http://onlinelibrary.wiley.com/doi/10.1111/ejn.13400/full

- Weissgerber TL et al. 2016. Transparent reporting for reproducible science. Journal of Neuroscience Research. http://onlinelibrary.wiley.com/doi/10.1002/jnr.23785/abstract

- Eassom H. 10 Types of plagiarism in research. Wiley Exchanges. https://hub.wiley.com/community/exchanges/discover/blog/2016/02/02/10-types-of-plagiarism-in-research